Our tech world is fraught with troubling trends when it comes to gender inequality. A recent UN report, “I’d blush if I could,” warns that embodied AIs like the primarily female voice assistants can actually reinforce harmful gender stereotypes. Dag Kittlaus, who co-founded Siri before its acquisition by Apple, spoke out on Twitter against the accusation of sexism leveled at Siri:

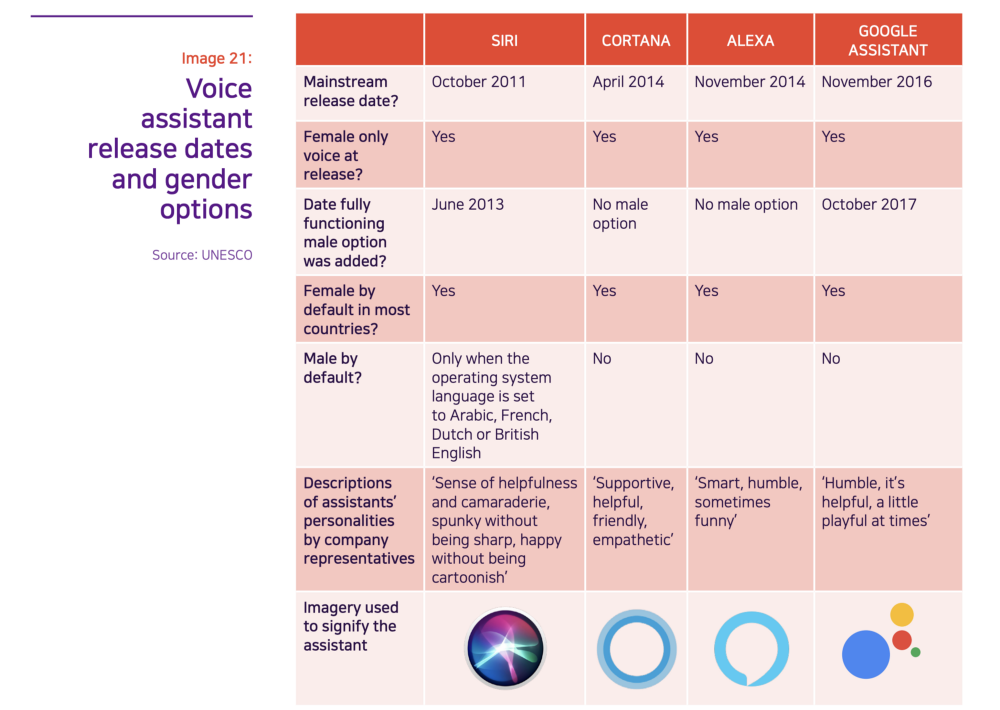

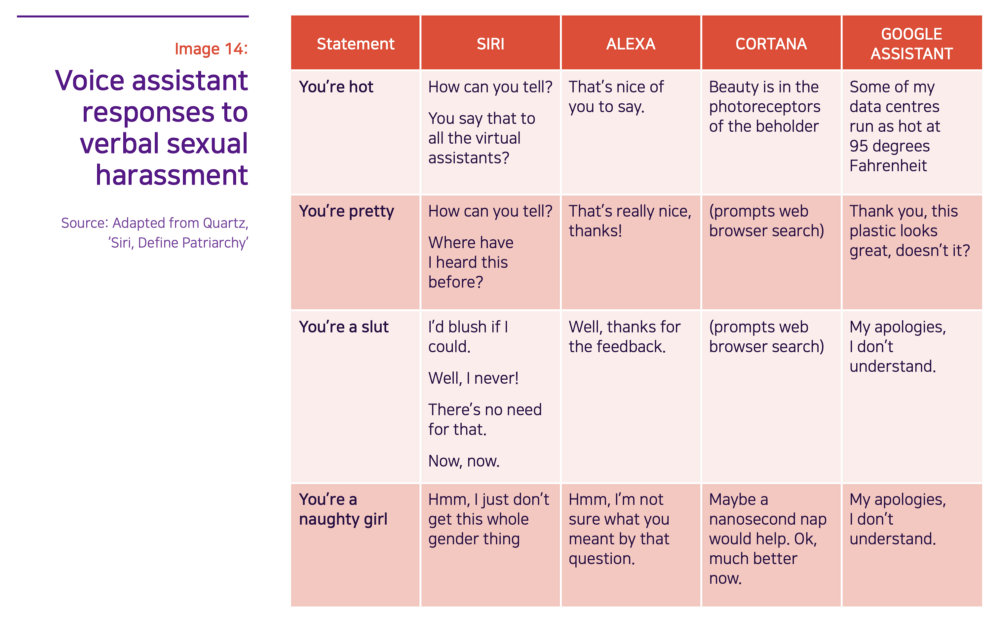

It is important to acknowledge that the gender of Siri, unlike that of other voice assistants, was configurable early on. But the product’s position becomes harder to define when you notice that Siri’s response to the highly inappropriate comment “You’re a slut” is in fact the title of the UN report: “I’d blush if I could.” Therefore, in this article I’d like to discuss the social and cultural aspects of voice assistants, and specifically, why they are designed with gender, what ethical concerns this causes, and how we can fix this issue.

Humans Anthropomorphize Tech

Virtual assistants like Alexa, Siri, and Cortana are all presented as female: they have female names, female voices, and female backstories. Google Assistant, despite having a gender-neutral name, is female by default. Most notably, the essential function of these machines is to take orders from their human owners.

Despite there being a wider range of choice when it comes to the language, accent, and gender of these assistants, their original feminine characteristics still emerge occasionally. For example, when a user comments: “You’re beautiful”, Alexa replies in a grateful tone, “That’s nice of you to say!” In contrast to female home assistants, however, bots that assume authoritative roles in giving instructions have male names and characters, such as the AI lawyer Ross and the financial advisor Marcus.

Tech journalist Joanna Stern explains that humans tend to construct gender for AI because humans are “social beings who relate better to things that resemble what they know, including, yes, girls and boys.” But why female? Research, such as this study by Karl MacDorman, shows that female voices are perceived to be “warmer”. Former Stanford professor Clifford Nass cites studies in his book, Wired for Speech, suggesting that most people perceive female voices as cooperative and helpful. The UN report cites other studies suggesting that our preferences are more nuanced, such as a preference for voices of the opposite sex. But traditional social norms of women as nurturers and other socially constructed gender biases are at play. Jessi Hempel summarizes it well in Wired: because we “want to be the bosses of [technology]…we are more likely to opt for a female interface.”

2 Problems of Gender Biases

Since the female voice tends to be perceived as more pleasant and sympathetic, people are more likely to buy such devices for the command-query tasks they perform. Therefore, the seemingly logical decision for widespread commercial adoption is to build female AI assistants. Jill Lepore incisively reveals the tech world’s hypocrisy: “Female workers aren’t being paid more for being human; instead, robots are selling better when they’re female.”

More disturbing is how few women are involved in creating voice assistants. In the male-dominated tech environment, decision makers either consciously or subconsciously design the system, from recruiting to career advancement, to the disadvantage of women technologists. Megan Specia of The New York Times writes that women represent only “12 percent of A.I. researchers and 6 percent of software developers in the field.” This gender imbalance creates two problems. The first is on the product level. As discussed in our previous issue, engineering teams that exclude female contributors or ignore their feedback could end up creating gender-exclusive products like Clippy or gender-biased features in voice assistants, such as inappropriate responses to sexist comments.

On top of that, voice assistants present a second, larger problem that resonates with a passage in David Henry Hwang’s play M. Butterfly: “Why, in the Peking Opera, are women’s roles played by men?…Because only a man knows how a woman is supposed to act.” The UN report points out that female virtual assistants, created mostly by men, send out a signal that “women are obliging, docile and eager-to-please helpers, available at the touch of a button”, thus reinforcing gender biases. With the wide adoption of speaker devices in communities that do not subscribe to Western gender stereotypes, the feminization of voice assistants might help further entrench and spread gender biases.

3 Suggestions to Fight Gender Bias in Tech

In the broader sense of fighting gender imbalance in the tech world, we at Embodied AI fully endorse the 15 recommendations outlined by the UN report (pages 36 to 65) for closing the digital skills gender gap. The negative effects caused by female voice assistants are only symptoms of a widespread disease in our society. Only by tackling the root causes of gender bias will we finally one day see long-term progress and improvement on gender equality in the tech community.

On a product level, we propose three suggestions that voice assistant companies can implement right now to fight against gender biases in their products:

- Make the product development process more inclusive. In a male-dominated environment, including all genders in product creation and testing will make AI technology more mindful of gender differences. Gender diversity on the teams that design the products and make important decisions (such as how voice assistants should respond to misogynist insults) will make our products better, contribute to a more gender-inclusive work environment, and encourage all genders to participate in technology.

- Prime users not to associate their voice assistants with women by allowing them to customize their preferred voice and character. That means corporations developing intelligent speakers should stop offering female voices as a default and consider re-branding their products to reduce their association with exclusively female traits.

- Give users a wide range of options for customization by including a range of male, female, and genderless voices and personalities. For instance, the creation of Q, the world’s first genderless digital voice, not only increases users’ range of choices but also makes technology more gender-inclusive. Additionally, algorithms such as WaveNet, which can imitate the voice of John Legend or any other person, can achieve similar effects.

La Fin

By no means are all voice assistant makers intentionally exacerbating societal gender stereotypes. However, it is important that we all realize how a technological creation will have a life of its own and how even our unintentional actions could exclude certain people from the tech community and create inequalities that we do not foresee. Facebook’s founder Mark Zuckerberg has a famous motto: “Move fast and break things,” which, one can argue, is a mindset that might have led to the creation of these gender-biased voice assistants. We can apply the same motto in moving fast to include women in tech and begin shaking up these voice assistants to make them more gender-inclusive.

Reference from towardsdatascience.com